More or Less Collectors Moving to Azure

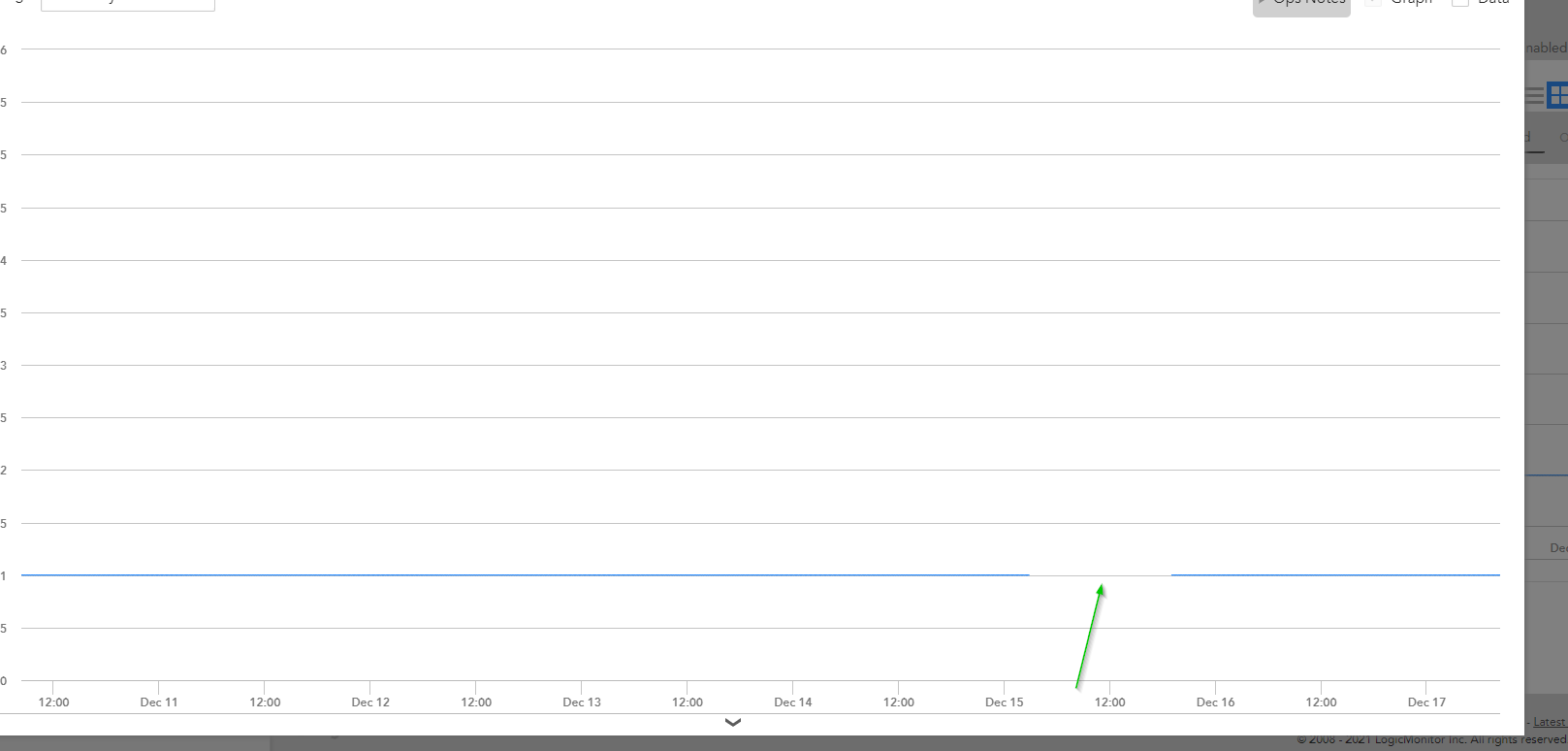

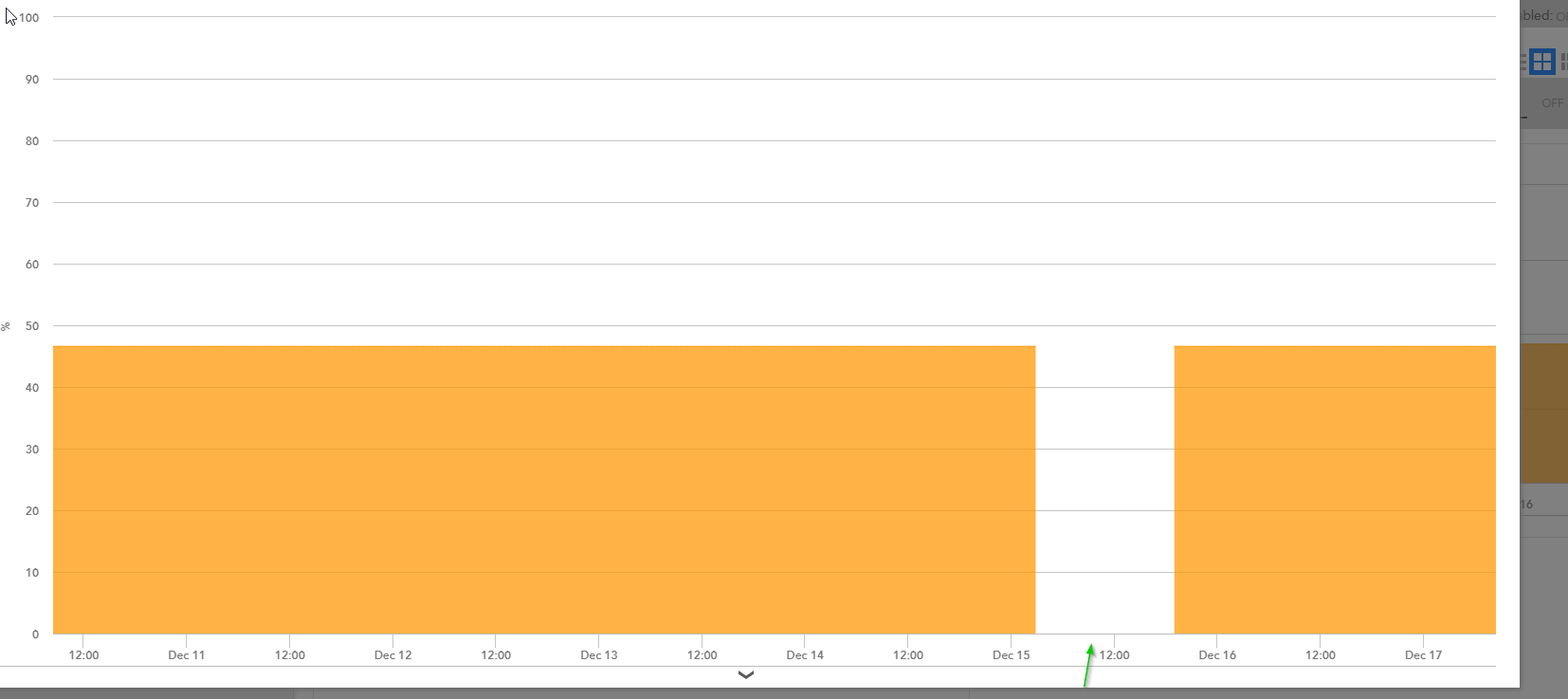

We are looking to move our on-prem bare-metal collectors in our datacenters up to Azure Ubuntu VMs. We've come up with the estimates below. Currently our largest ongoing problem is "No Data" issues, where we have gaps in data collection on collectors, along with "All NaN" data collection outputs. The standard line from LM support is to place collectors as close to the resource as possible. These collectors will be monitoring the resources in their region over MPLS connections, so our latency will be around 50-100 ms. What recommendations can you give on the direction we should take?

Small Collectors Needed Per Region - 141 Collectors Needed

Central US - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Small Collector 7k = 39

Germany West Central - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Small Collector 7k = 15

North Europe - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Small Collector 7k = 15

South India - 722 Devices * Average Instance size 325 / Small Collector 7k = 33

West US2 - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Small Collector 7k = 39
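For anyone checking the math, here is a minimal Python sketch of the formula used in each line above: devices in the region, times the average instance count per device, divided by the collector capacity, rounded down. The 325 average and the 7k small-collector capacity are the figures from this post, not verified against LM documentation; the names `REGION_DEVICES` and `collectors_needed` are made up for illustration.

```python
# Figures taken from the post: 325 average instances per device,
# 7,000 instances per small collector (the post's estimate).
AVG_INSTANCES_PER_DEVICE = 325
SMALL_COLLECTOR_CAPACITY = 7_000

# Devices per Azure region. The Americas and EMEA totals are split
# evenly across two regions, as in the post.
REGION_DEVICES = {
    "Central US": 1692 // 2,
    "Germany West Central": 670 // 2,
    "North Europe": 670 // 2,
    "South India": 722,
    "West US2": 1692 // 2,
}


def collectors_needed(devices, avg_instances, capacity):
    """Collectors for a region, rounded down to match the post's figures."""
    return (devices * avg_instances) // capacity


small = {
    region: collectors_needed(devices, AVG_INSTANCES_PER_DEVICE, SMALL_COLLECTOR_CAPACITY)
    for region, devices in REGION_DEVICES.items()
}

for region, count in small.items():
    print(f"{region}: {count}")
print("Total small collectors:", sum(small.values()))
```

Swapping `SMALL_COLLECTOR_CAPACITY` for another size's capacity gives the per-region counts for that size.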

Medium Collectors Needed Per Region - 97 Collectors Needed

Central US - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Medium Collector 10k = 27

Germany West Central - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Medium Collector 10k = 10

North Europe - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Medium Collector 10k = 10

South India - 722 Devices * Average Instance size 325 / Medium Collector 10k = 23

West US2 - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Medium Collector 10k = 27

Large Collectors Needed Per Region - 68 Collectors Needed

Central US - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Large Collector 14k = 19

Germany West Central - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Large Collector 14k = 7

North Europe - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Large Collector 14k = 7

South India - 722 Devices * Average Instance size 325 / Large Collector 14k = 16

West US2 - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Large Collector 14k = 19

Extra Large Collectors Needed Per Region - 47 Collectors Needed

Central US - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Extra Large Collector 20k = 13

Germany West Central - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Extra Large Collector 20k = 5

North Europe - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Extra Large Collector 20k = 5

South India - 722 Devices * Average Instance size 325 / Extra Large Collector 20k = 11

West US2 - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Extra Large Collector 20k = 13

Double Extra Large Collectors Needed Per Region - 32 Collectors Needed

Central US - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Double Extra Large Collector 28k = 9

Germany West Central - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Double Extra Large Collector 28k = 3

North Europe - EMEA Devices 670 / 2 = 335 * Average Instance size 325 / Double Extra Large Collector 28k = 3

South India - 722 Devices * Average Instance size 325 / Double Extra Large Collector 28k = 8

West US2 - North/South America Devices 1692 / 2 = 846 Devices * Average Instance size 325 / Double Extra Large Collector 28k = 9
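To compare the sizes side by side, the sketch below totals the collector count for every size under two rounding rules: rounding down per region (which is what the per-region figures in this post correspond to) and rounding up (since a fractional collector presumably still means deploying a whole VM). The capacities are the estimates from this post, and the names `CAPACITY`, `REGION_DEVICES`, and `total_collectors` are hypothetical.

```python
AVG_INSTANCES_PER_DEVICE = 325  # average instances per device, from the post

# Instance capacity per collector size, as estimated in the post.
CAPACITY = {
    "Small": 7_000,
    "Medium": 10_000,
    "Large": 14_000,
    "Extra Large": 20_000,
    "Double Extra Large": 28_000,
}

# Devices per Azure region, as split in the post.
REGION_DEVICES = {
    "Central US": 846,
    "Germany West Central": 335,
    "North Europe": 335,
    "South India": 722,
    "West US2": 846,
}


def total_collectors(capacity, round_up):
    """Sum per-region collector counts for one collector size."""
    total = 0
    for devices in REGION_DEVICES.values():
        instances = devices * AVG_INSTANCES_PER_DEVICE
        if round_up:
            total += -(-instances // capacity)  # integer ceiling division
        else:
            total += instances // capacity      # integer floor division
    return total


rounded_down = {size: total_collectors(cap, round_up=False) for size, cap in CAPACITY.items()}
rounded_up = {size: total_collectors(cap, round_up=True) for size, cap in CAPACITY.items()}

for size in CAPACITY:
    print(f"{size}: {rounded_down[size]} rounded down, {rounded_up[size]} rounded up")
```

Neither total includes headroom for failover or growth, so treat both as a lower bound on what would actually be deployed.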